For the first time since the widespread adoption of the internet, a technology has emerged that seems poised to change our daily lives. In many ways, it already has. But as with any revolution, there are some who feel they have been dragged unwillingly into this future, without consent, representation, or compensation.

Artificial Intelligence (AI) is commonly defined as the simulation of human intelligence by machines, particularly computer systems. It involves creating algorithms and models that enable machines to perform tasks that typically require “human” intelligence: learning, reasoning, problem-solving, perception, and language understanding. It is already a part of our daily lives: facial recognition when you unlock your phone, chatbots, and fraud detection are all examples of AI.

For AI to work, it must be exposed to input data, or trained. Training an AI model refers to the process of teaching a machine learning system to perform specific tasks by feeding it large amounts of data and using algorithms to adjust the model's internal parameters based on that data. The goal is for the model to learn patterns, relationships, or representations that enable it to make predictions, classifications, or other decisions when presented with new, unseen inputs.
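To make "adjusting the model's internal parameters" concrete, here is a minimal sketch of training in plain Python. It is a toy illustration only: the data, the function name `train`, and the settings `lr` and `epochs` are all assumptions made for this example, and real models have millions or billions of parameters rather than two.

```python
# Toy "training": learn w and b so that w * x + b matches the example data.
# Everything here (names, data, learning rate) is illustrative.
def train(xs, ys, lr=0.01, epochs=5000):
    w, b = 0.0, 0.0  # the model's internal parameters, initially untrained
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Nudge each parameter in the direction that reduces the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Training data that follows the hidden pattern y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train(xs, ys)

# Prediction for a new, unseen input
prediction = w * 10.0 + b
```

The model never sees the rule behind the data. It only sees examples, and repeated small adjustments bring its parameters close to the pattern, which is why the choice of training data matters so much.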

Leica's new M11-P includes Content Credentials written to every image created. Source: Leica

The main issue many artists have with AI models being trained on their work revolves around consent, compensation, and attribution. AI models are often trained on large datasets scraped from the internet, which may include copyrighted artworks. Many artists argue that their work is being used without their permission, violating their intellectual property rights. Artists do not receive payment or royalties when their work is used to train AI models, even though the models may generate revenue for companies or individuals who use them. Artists may not wish for a model to be trained on their work.

There have been efforts to combat the unrestricted use of creative work to train models. Groups have sued large AI companies like OpenAI. There have also been calls to revise and strengthen copyright laws so they explicitly address AI, along with other legislative and executive actions at both the state and federal level. While litigation and legislation both progress slowly, a movement within the industry has gained some traction.

The Content Authenticity Initiative (CAI) is a project spearheaded by Adobe in collaboration with various tech companies, media organizations, and advocacy groups. The initiative aims to "promote transparency in digital content creation and distribution by providing a secure framework to verify the creation and modification of digital assets."

Major camera brands, including Sony, Canon, Nikon, Fujifilm, and Leica, have integrated or committed to supporting Content Credentials. Platforms such as TikTok and Google have joined the Content Authenticity Initiative and the Coalition for Content Provenance and Authenticity (C2PA). Adobe Creative Cloud apps like Photoshop and Lightroom support Content Credentials, providing creators with tools to protect their work and ensure proper attribution. Adobe Firefly and Amazon Titan Image Generator, among others, use Content Credentials to indicate provenance and respect creators’ rights. Adobe’s tools also allow creators to specify their preferences for generative AI training, ensuring their work is not used without consent.

Some of the companies involved in the Content Authenticity Initiative

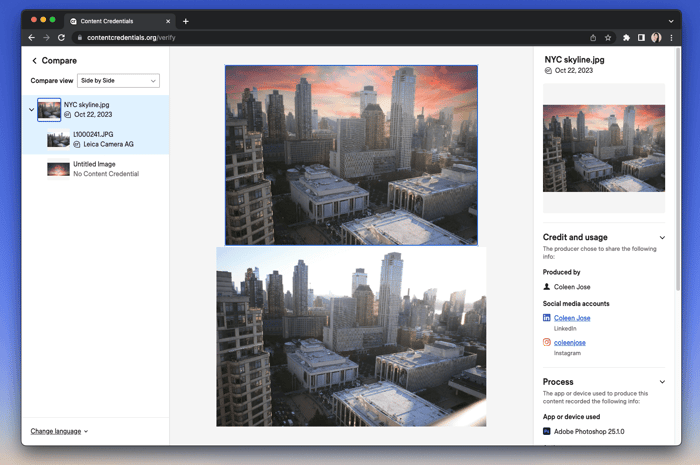

The backbone of the Content Authenticity Initiative is provenance metadata: cryptographically signed metadata embedded in the file that can show who created the work and how it has been modified. Provenance metadata includes details such as the creator’s name, the date and location of creation, and information about the editing tools used. As the file is digitally altered, that information is added to the metadata, so Content Credentials document the editing history and provide insight into how an image has been altered. This is especially useful for identifying manipulated content or fake media. Creators can also use Content Credentials to signal that their content should not be used for training AI models. Platforms and tools that respect these signals (like Adobe Firefly) exclude such images from training datasets.
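The idea of a signed, growing edit history can be sketched in a few lines of Python. This is a simplified illustration and not the actual Content Credentials format: the field names, the `add_entry` and `verify` helpers, and the shared `SECRET_KEY` are all hypothetical, and an HMAC stands in for the certificate-based signatures that real Content Credentials rely on.

```python
import hashlib
import hmac
import json

# Hypothetical demo key; a stand-in for a creator's or tool's signing certificate.
SECRET_KEY = b"demo-signing-key"


def _digest(data: bytes) -> str:
    """Fingerprint of the file contents."""
    return hashlib.sha256(data).hexdigest()


def _sign(entry: dict) -> str:
    """Sign an entry so that any later change to it can be detected."""
    payload = json.dumps(entry, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def add_entry(manifest, action, tool, content, ai_training="notAllowed"):
    """Return a new manifest with one more signed entry linked to the previous one."""
    entry = {
        "action": action,
        "tool": tool,
        "content_hash": _digest(content),
        "ai_training": ai_training,  # illustrative preference flag
        "previous": manifest[-1]["signature"] if manifest else None,
    }
    return manifest + [dict(entry, signature=_sign(entry))]


def verify(manifest, content):
    """Check every signature and link, then check the file matches the last entry."""
    previous = None
    for signed in manifest:
        entry = {k: v for k, v in signed.items() if k != "signature"}
        if entry["previous"] != previous:
            return False
        if not hmac.compare_digest(signed["signature"], _sign(entry)):
            return False
        previous = signed["signature"]
    return bool(manifest) and manifest[-1]["content_hash"] == _digest(content)


original = b"raw pixels"
edited = b"raw pixels, cropped"

manifest = add_entry([], "created", "camera", original)
manifest = add_entry(manifest, "cropped", "photo editor", edited)

matches_history = verify(manifest, edited)            # file agrees with its history
silently_changed = verify(manifest, b"other pixels")  # file altered outside the history
```

Because each entry is signed and points at the one before it, rewriting an earlier step or swapping the file without recording the change causes verification to fail. A training pipeline that chooses to respect the signal could read the preference flag and skip the file, but nothing in the metadata itself forces it to.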

A simple comparison of the components of durable Content Credentials, and their strength in combination. Source: CAI

In theory, this addresses the three primary concerns of creators. The author of a work can be determined from the metadata, and that author should then be able to set parameters for how the work is used (similar to a Creative Commons license). It also builds trust with viewers, who should be able to identify whether an image has been manipulated. With deepfakes proliferating across the internet, the ability to detect manipulation is valuable to a company like Facebook in building trust with its users. AI companies will also be able to be transparent about how their models have been trained. For a for-profit company, this may or may not be desirable.

Content Credentials are updated as changes are made to a file. Source: CAI

To be effective, the Content Authenticity Initiative must overcome some obstacles. Not all platforms, tools, or content creators have adopted CAI standards, which limits its reach. Many social media platforms and websites still strip metadata when content is uploaded. The initiative relies on platforms like TikTok and Adobe to honor metadata and respect preferences, such as not training AI models on specific content, and not all companies may comply without legal mandates. And while Content Credentials can label AI-generated content, it is still challenging to fully prevent malicious use or ensure all manipulated content is flagged.

The Content Authenticity Initiative currently appears to be the best chance of creating market pressure to implement authorship and the rights of creators. Buy-in from leading companies across industries is crucial. Will this be enough to prevent further legislation on the issue, or will copyright laws and successful lawsuits ultimately force the regulation of AI?

*Some of this article was generated with AI. Can you tell which parts?